Day 0

A long time ago I happened to read Minsky's book on frame theory, and then a book on the perceptron invented by Frank Rosenblatt. After that came many popular science books on AI and neural networks. At university I programmed a perceptron with hidden layers, using the FFT as the convolution function; that was 30 years ago. Then there was a gap in my scientific work, the WWW arrived, and I switched to it completely and forgot about AI.

In those days AI was not a popular topic: there was no commercial interest and there were no good algorithms for training neural networks. Things have changed now. So I decided to run an experiment: use ANNs with today's technologies and available tools to see whether I can predict the behavior of a cloud network. In the following days I'll dig into the subject, select a type of ANN and the tools, train the ANN and try to get interesting results.

The whole process will be written down here, in an evolving blog. I think an evolving blog is the most relevant type of blog when you learn and try something new. Most blogs on the internet look like articles or lectures in which the blogger teaches the reader something he knows very well. In my opinion a blog is literally a biographical log, or "weblog" as it is defined in Wikipedia. It should be written in a diary style, where events are documented in the sequence in which they occur.

Thinking process

Day 1 - 17 June 2017

Today's computer systems tend to grow very fast. They are heterogeneous and large, and will keep growing in number of nodes and in complexity. Probably in the very near future individual systems may reach the current size of the Internet, and they will probably continue growing until the paradigm changes. Control and prediction of the macroscopic behavior of such systems is a question of the utmost importance for everyone: for individuals, for small private companies and intercontinental corporations, and for governments.

Although this theme is enormously large and deep, and there is still no science behind it, I will try to understand where we stand and express my humble vision of the subject.

This is not a scientific work; please do not expect any formulas here (joke 😉) or cumbersome sentences written in a scientific-like manner. The article is written freely, by simply concentrating on the selected subject and sometimes looking at Google search results...

So, straight to the matter:

What do we have today:

We have large networks: clouds with excellent infrastructure provided by companies like Amazon, Google, Microsoft, etc. They all provide monitoring and logging tools, which are widely available in private and public cloud environments but are not sufficient to tell reliably what will happen with the network even within the next several minutes. Why? Simply because each system, or each part of it, has a physical implementation, and every physical thing is subject to the laws of physics. The parts of such systems will most probably degrade and stop working after some time. So at the very bottom of the system there is a physical layer which will cause instability. Looking further up, we discover higher levels, and each of them will eventually have issues caused by "software and hardware bugs". The main source of "misbehavior" is "bugs and errors", which can be measured by probability. So far we are fine: we can predict the probability that our large system is stable and that it will work as we expect. What?

What is the meaning of "expected work"? How do we know that a large system with millions, and maybe billions, of nodes will work as we expect?

It is not a given that a small system of 2-3 nodes, when scaled to millions of nodes, will keep the same properties and work reliably. Bugs and errors in the cluster control system may change its behavior. The system may not simply stop responding; it may produce unexpected results, which is far more dangerous than a system which just became very slow or stopped working. For example, a response from the service may contain an erroneous result!

Where can we find a solution to this issue? Big reliable systems already exist everywhere: big physical systems (Earth, the Solar System) and biological systems (a colony of ants, an animal body, a brain, etc.).

The fact that these systems are reliable may mean at least 2 things:

1. The system is based on a very basic physical law and is very simple at its base; such systems are stable by nature.

2. Or, if the system is very complex, and even human-made, it must have its own mechanisms for predicting and preventing misbehavior.

There are 2 large classes of solutions: deterministic and non-deterministic.

We can look for mathematical models, use discrete mathematics, etc. But we still will not find a ready solution. There are several proposals and articles which can be found on the internet.

For example, one of the latest proposals is Measurement Science for Complex Information Systems, which proposes to measure system behavior by building mathematical models borrowed from the physical sciences.

Imitation modeling is where a big computer system or a real-world physical system is imitated by means of another, simplified software system (an imitation model). This type of modeling is more intuitive than pure mathematical models with equations. The observer can see what happens with the model when it grows. But it takes deep knowledge of the system architecture to build an imitation model of it and then program it.

Close to imitation modeling stand ML tools based on Artificial Neural Networks. It is believed that the gist of our brain is its connectome, the brain wiring, which can be modeled by an Artificial Neural Network (ANN). ANNs are very popular in the computer business world.

Tools based on Artificial Neural Networks are the main engines of success of today's technological revolution.

It is neither a panacea nor a philosopher's stone. An ANN is a very convenient, intuitive tool that is very easy to set up. The main issue is to select the proper type of ANN and train it. ANNs can solve many classes of tasks, from classification of images, sounds and events, and finding hidden relations between different facts, to natural language conversation and learning to play such complex games as "Chess" and "Go", and even learning to play and win any game without "seeing it before". The number and complexity of possible applications is endless, and the tool is within reach of anyone. You do not need a high mathematical education or a science degree to use it. The main property of an ANN, as I see it, is that it acts as a "mirror" which reflects the outer world into its inner structure. It is possible to build abstraction hierarchies of a very high level with ANNs.

Day 2 - 18 June 2018

Can we use a neural network to predict the behavior of the cloud? Let's check what types of ANNs we have, then select one particular type and find a supplier of the selected ANN. Then we can do some experiments.

So, what types of ANNs are currently known?

Let's have a look at the Wikipedia article on ANN. An ANN consists of nodes, connections between them and an activation function. These are the main things which define its architecture. There is a good overview of some ANN architectures here -> Architecture of ANN. The most common type of architecture is FF (Feed-Forward), where input nodes are connected to one or more nodes of the next hidden layer or to the final output layer. FF ANNs with hidden layers are good at recognizing speech, but they are not so good at predicting a sequence.

Then there are recurrent neural networks (RNN), where information flows in cycles, which allows the network to remember information for a long time. They are difficult to train, but they are very good at predicting a sequence, because their layers have a feedback connection from the previous time step and thus aggregate information about past states. Because of this an RNN can be trained to predict the next character in a text, for example. After training on thousands of English books such an RNN can start generating English text one character at a time. RNNs are used to predict stock exchange prices (!) and human behavior.

There are many other types of ANNs, but for now I will settle on the RNN, as it looks like it suits my needs: it can help us predict "the future" state of our cloud.

I need to define the input data. A cloud is a set of interconnected nodes orchestrated by some master nodes. Each node is in some state at any given time.

Let's define the momentary "state" of the cloud network as a vector of parameters with a predefined set of values:

Node Readiness: Down/Up - 0/1,

Load of the node - 4 states: 1 - below 25%, 2 - from 25% to below 75%, 3 - from 75% to below 90%, 4 - between 90 and 100%

Each node will have 5 possible states: down, or up with one of the 4 load levels.

At the last moment I decided to add one more parameter: the presence of errors in the log. Let's imagine that we have an error indicator that can be read by our RNN at any given time. The error indicator will also be 1 or 0: 1 when there is some error in the log and 0 when there are no errors.
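The three parameters above can be sketched as a small data structure. This is only an illustration: the names `NodeState`, `load_bucket` and `make_state` are hypothetical, and I am assuming that load arrives as a percentage between 0 and 100.

```python
from typing import NamedTuple


class NodeState(NamedTuple):
    ready: int   # 0 = down, 1 = up
    load: int    # load level 1..4 (see load_bucket)
    error: int   # 0 = no errors in the log, 1 = errors present


def load_bucket(load_percent: float) -> int:
    """Map a load percentage (0..100) to the four load levels 1..4."""
    if not 0 <= load_percent <= 100:
        raise ValueError("load_percent must be between 0 and 100")
    if load_percent < 25:
        return 1
    if load_percent < 75:
        return 2
    if load_percent < 90:
        return 3
    return 4


def make_state(is_up: bool, load_percent: float, has_errors: bool) -> NodeState:
    """Build the three-parameter state of one node from raw readings."""
    return NodeState(int(is_up), load_bucket(load_percent), int(has_errors))
```

For example, `make_state(True, 95.0, True)` describes a node that is up, almost fully loaded and has errors in its log.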

Let's try to define our RNN structure. In general it will have 3 layers: an input layer, a hidden inner layer and an output layer. The input layer will have X nodes, and the output layer will have the same number of nodes. The number of input nodes will be defined by the state of the cloud. For simplicity let's assume that we are looking at the cloud through a small window which can capture the status of 100 nodes only.

Each node has a state S. To represent the status of the cloud we will need some combination of state values and node number, e.g. 80141, where 80 is the serial number of the node, 1 means the node is up and running, 4 means its load is between 90 and 100%, and the final 1 means it has errors in the application log. So for our 100-node window of the cloud we will have 100 node states. How do we feed all these 100 five-digit numbers into the input layer of the RNN at the same moment of time? How many input neurons do we need? A lot!
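A minimal sketch of this five-digit code, assuming node numbers 0..99 padded to two digits. The function names are hypothetical, and the code is kept as a string so that a leading zero in the node number is not lost.

```python
def encode_status(node_id: int, ready: int, load: int, error: int) -> str:
    """Pack node number and the three state digits into one code, e.g. '80141'."""
    return f"{node_id:02d}{ready}{load}{error}"


def decode_status(code: str) -> tuple[int, int, int, int]:
    """Split a code such as '80141' back into (node_id, ready, load, error)."""
    node_id, state = code[:-3], code[-3:]
    return int(node_id), int(state[0]), int(state[1]), int(state[2])


print(encode_status(80, 1, 4, 1))   # 80141
print(decode_status("80141"))       # (80, 1, 4, 1)
```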

What if we optimize input data presentation?

Each node of the cloud may have the following states:

010

011

020

021

030

031

040

041

110

111

120

121

130

131

140

141

The first 8 states we can treat as just one state, 0, because the leading 0 means that the node is down and the other parameters have no meaning in this case.

That means that we are left with 9 meaningful states and we can encode them as 0..8. That is much better.
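The collapse from 16 raw combinations to 9 codes can be sketched as follows. The particular numbering of the "up" states (110 becomes 1, 111 becomes 2, and so on up to 141 becoming 8) is my assumption; any fixed one-to-one numbering would do.

```python
def compact_state(ready: int, load: int, error: int) -> int:
    """Map (ready, load, error) to a single code in 0..8.

    All "down" combinations collapse to 0; the eight "up" combinations
    map to 1..8 in the order 110, 111, 120, 121, ..., 141.
    """
    if ready == 0:
        return 0
    if load not in (1, 2, 3, 4) or error not in (0, 1):
        raise ValueError("load must be 1..4 and error must be 0 or 1")
    return 1 + (load - 1) * 2 + error
```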

Now let's decide how we will feed the data to the RNN. Shall we feed the node states one at a time, or shall we pass all 100 nodes together?

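In the former case we can feed the states of the whole cloud one by one, and not only 100 of them; in the latter case we need 400 input neurons to pass the data for 100 cloud nodes (for example, 4 binary inputs per node, which is enough to encode 9 states).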
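One way to arrive at 400 inputs is to spend 4 binary inputs on each node, since 4 bits are enough for the codes 0..8. That reading is an assumption on my part, and the helper names and sample data below are hypothetical.

```python
BITS_PER_NODE = 4  # 4 bits are enough for the 9 codes 0..8


def to_bits(code: int) -> list[int]:
    """Binary-encode one node code (0..8) as 4 inputs, most significant bit first."""
    if not 0 <= code <= 8:
        raise ValueError("code must be in 0..8")
    return [(code >> shift) & 1 for shift in range(BITS_PER_NODE - 1, -1, -1)]


def cloud_to_input(codes: list[int]) -> list[int]:
    """Concatenate the bit patterns of all nodes in the window into one input vector."""
    vector: list[int] = []
    for code in codes:
        vector.extend(to_bits(code))
    return vector


window = [0, 8, 3] + [1] * 97    # made-up states for a 100-node window
inputs = cloud_to_input(window)
print(len(inputs))               # 400
```

A one-hot encoding (9 inputs per node, 900 in total) would be another option; the binary form is shown only because it matches the 400 figure.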

Tomorrow I will try to find an effective solution, or maybe I will decide to select a better ANN architecture.

Day 3 - 19 June 2018

Day of procrastination 😆. BTW, is there an international day of procrastination?

After a quick search on the internet I found that such a day exists, but it is the 25th of March. I'll establish today's date for myself as the Procrastination Day of the Blogger.

Day 4 - 20 June 2018

Let's try to formulate the task again, and what we want to achieve.

We have a cloud with thousands of nodes. Its architecture, inner logic and behavior are not known to us. We want to predict when malfunctions in the cloud may happen.

To achieve this we have selected an ANN as the only possible tool, and defined that the status of the cloud is described by a number per node which encodes several parameters of that node: readiness, load and presence of errors in the node's logs.

We need to choose the type of ANN and its architecture, train it on a previous set of statuses, test it and run it.
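As a sketch of what "train on previous statuses, then test" could look like in data terms: the history of cloud snapshots is cut into (past window, next snapshot) pairs, and the pairs are split chronologically. The function names, the window length and the 80/20 split are all assumptions for illustration, not a chosen design.

```python
def make_windows(history: list[list[int]], window: int):
    """Turn a time-ordered list of cloud snapshots into (past, next) pairs."""
    if window < 1:
        raise ValueError("window must be at least 1")
    pairs = []
    for t in range(len(history) - window):
        pairs.append((history[t:t + window], history[t + window]))
    return pairs


def split_in_time(pairs: list, train_fraction: float = 0.8):
    """Split chronologically so the test pairs come after the training pairs."""
    cut = int(len(pairs) * train_fraction)
    return pairs[:cut], pairs[cut:]
```

Splitting in time order, rather than shuffling, keeps the test honest: the network is then evaluated only on snapshots that come after everything it was trained on.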

To be continued tomorrow....

Day 5 - 25 June 2018

Sometimes tomorrow comes in 5 days, which is better than never...

Although I have a big gap in knowledge in the field of AI/NN, I am not losing my optimism.

5 days ago I learned that Recurrent Neural Networks are best for solving prediction tasks, or other sequence tasks, when the length of the input is variable.

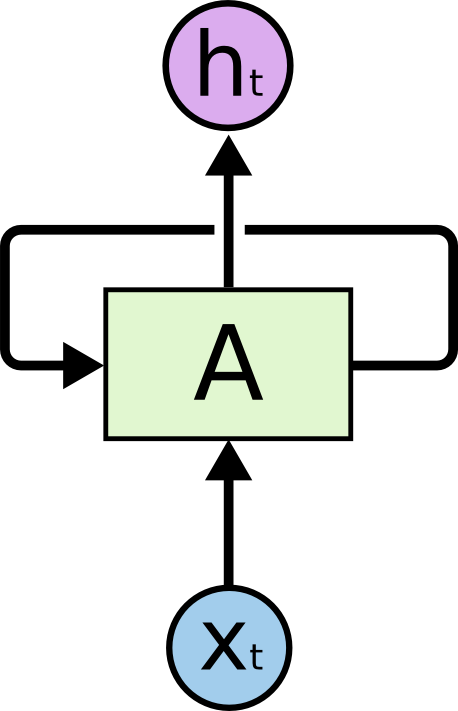

For readers who are programmers, it resembles a loop. The diagram can be "unlooped" (unrolled), and it becomes a sequence of neurons.

That means that on each step the RNN takes into account all the previous elements of the input sequence. In reality, though, it is very difficult to train it to handle dependencies on events that happened a long time ago (Sepp Hochreiter, 1991 thesis).
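The loop can be sketched in a few lines of plain Python. This is an untrained toy cell with random weights and a tanh activation, meant only to show how the hidden state is carried from step to step; the names, sizes and weight ranges are assumptions, and a real experiment would use an ML library.

```python
import math
import random


def rnn_step(x, h, w_in, w_rec, bias):
    """One time step: new hidden state from current input x and previous hidden state h."""
    new_h = []
    for j in range(len(h)):
        total = bias[j]
        total += sum(w_in[j][i] * x[i] for i in range(len(x)))
        total += sum(w_rec[j][k] * h[k] for k in range(len(h)))
        new_h.append(math.tanh(total))
    return new_h


def run_rnn(sequence, hidden_size, seed=0):
    """Apply the same cell to every element of the sequence, carrying h forward."""
    rng = random.Random(seed)
    input_size = len(sequence[0])
    # Untrained random weights; the same weights are reused at every step.
    w_in = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
    w_rec = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
    bias = [0.0] * hidden_size
    h = [0.0] * hidden_size
    for x in sequence:          # this is the "loop" in the diagram
        h = rnn_step(x, h, w_in, w_rec, bias)
    return h
```

Because `h` is fed back in on every iteration, the final hidden state depends on the whole sequence, and the sequence can have any length.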

A better RNN is the LSTM network. LSTM (Long Short-Term Memory) networks were introduced by Hochreiter & Schmidhuber and are capable of learning long-term dependencies. LSTM networks are explained very well in Understanding LSTMs.

Next step will be to find a convenient environment and good examples to learn LSTM.
